My new book Bit by Bit: Social Research in the Digital Age is for social scientists who want to do more data science, data scientists who want to do more social science, and anyone interested in the combination of these two fields. The central premise of Bit by Bit is that the digital age creates new opportunities for social research. As I was writing Bit by Bit, I also began thinking about how the digital age creates new opportunities for academic authors and publishers. The more I thought about it, the more it seemed that we could publish academic books in a more modern way by adopting some of the same techniques that I was writing about. I knew that I wanted Bit by Bit to be published in this new way, so I created a process called Open Review that has three goals: better books, higher sales, and increased access to knowledge. Then, much as doctors used to test new vaccines on themselves, I tested Open Review on my own book.

This post is the first in a three-part series about the Open Review of Bit by Bit, and it describes how Open Review led to a better book. After explaining the mechanics of Open Review, I'll focus on three ways that it improved the book: annotations, implicit feedback, and psychological effects. The other posts in this series describe how Open Review led to higher sales and increased access to knowledge.

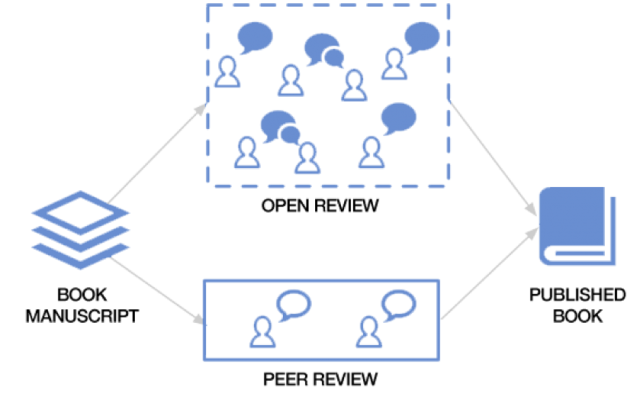

How Open Review works

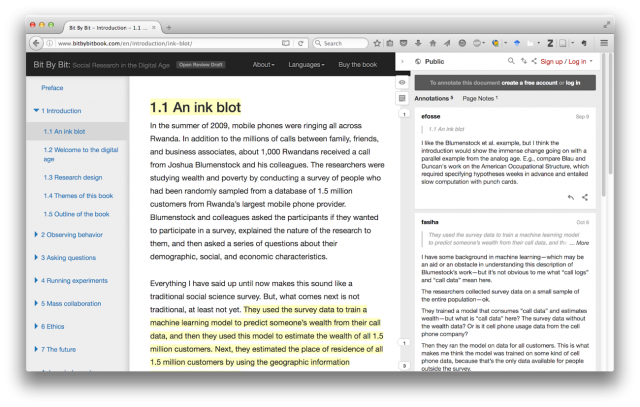

When I submitted my manuscript for peer review, I also created a website that hosted the manuscript for a parallel Open Review. During Open Review, anyone in the world could come and read the book and annotate it using hypothes.is, an open source annotation system. Here’s a picture of what it looked like to participants.

In addition to collecting annotations, the Open Review website also collected other information about how readers used the site, such as which pages they visited. Once the peer review process was complete, I used the information from the peer review and the Open Review to improve the manuscript.

In the rest of this post, I'll describe how the Open Review of Bit by Bit helped improve the book, focusing on three things: annotations, implicit feedback, and psychological effects.

Annotations

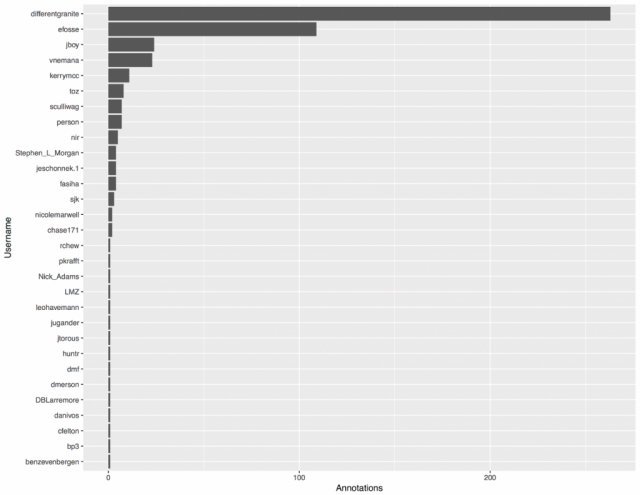

The most direct way that Open Review led to a better book is through annotations. Readers used hypothes.is to leave annotations like those shown in the image at the top of this post. During the Open Review period, 31 people contributed 495 annotations. These annotations were extremely helpful, and they led to many improvements in Bit by Bit.

People often ask how these annotations compare to peer review, and I think it is best to think of them as complementary. The peer review was done by experts, and the feedback that I received often pushed me to write a slightly different book. The Open Review, on the other hand, was done by a mix of experts and novices, and the feedback was more focused on helping me write the book that I was trying to write. A further difference is the granularity of the feedback. During peer review, the feedback often involved removing or adding entire chapters, whereas during Open Review the annotations were often focused on improving specific sentences.

The most common annotations were related to clunky writing. For example, an annotation by differentgranite urged me to avoid unnecessarily switching between “golf club” and “driver.” Likewise, an annotation by fasiha pointed out that I was using “call data” and “call logs” in a way that was confusing. There were many, many small changes like these that helped improve the manuscript. In addition to helping with writing, some annotations showed me that I had skipped a step in my argument. For example, an annotation by kerrymcc pointed out that when I was writing about asking people questions, I skipped qualitative interviews and jumped right to surveys. In the revised manuscript, I've added a paragraph that explains this distinction and why I focus on surveys. The changes prompted by the annotations described above might have come from a copy editor (although my copy editor was much more focused on grammar than on writing).

But some of the annotations during Open Review could not have come from any copy editor. For example, an annotation by jugander pointed me to a paper I had not seen that was a wonderful illustration of a concept that I was trying to explain. Similarly, an annotation by pkrafft pointed out a very subtle problem in the way that I was describing the Computer Fraud and Abuse Act. Both of these annotations came from people with deep expertise in computational social science, and they helped improve the intellectual content of the book.

A skeptic might read these examples and not be very impressed. It is certainly true that the Open Review process did not lead to massive changes to the book. But these examples, and dozens of others, are small improvements that I did make. Overall, I think that these many small improvements added up to a major improvement. Here are a few graphs summarizing the annotations.

Annotations by person

Most annotations were submitted by a small number of people.

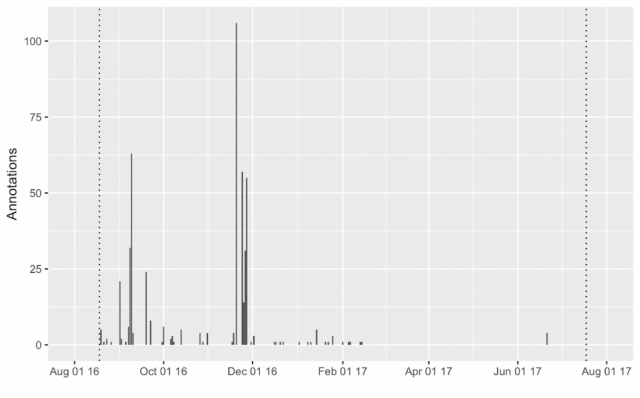

Annotations by date

Most annotations were submitted relatively early in the process. The spike in late November occurred when a single person read the entire manuscript and made many helpful annotations.

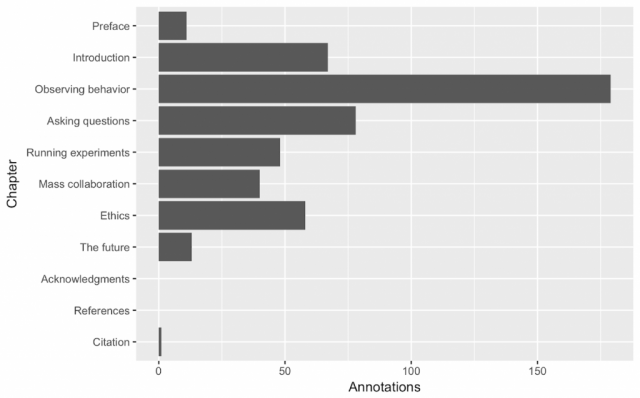

Annotations by chapter

Chapters later in the book received fewer annotations, but the ethics chapter was something of an exception.

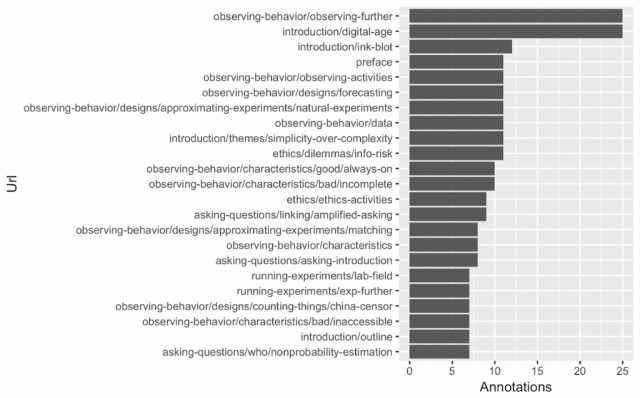

Annotations by URL

Here are the 20 sections of the book that received the most annotations. In this case, I don’t see a clear pattern, but this might be helpful information for other projects.

One last thing to keep in mind about these annotations is that they underestimate the amount of feedback that I received, because they only count annotations that were made through the Open Review website. In fact, when people heard about Open Review, they sometimes invited me to give a talk or asked for a PDF of the manuscript on which they could comment. Basically, the Open Review website is a big sign that says "I want feedback," and that feedback comes in a variety of forms in addition to the annotations.

One challenge with the annotations is that they come in continuously, but I tended to make my revisions in chunks. Therefore, there was often a long lag between when an annotation was made and when I responded. I think that participants in the Open Review process might have been more engaged if I had responded more quickly. I hope that future Open Review authors can figure out a better workflow for responding to annotations and incorporating them into the manuscript.

Implicit feedback

In addition to the annotations, the second way that Open Review can lead to better books is through implicit feedback. That is, readers were voting with their clicks about which parts of the book were interesting or boring. These "reader analytics" are apparently a hot topic in the commercial book publishing world. To be honest, this feedback proved less helpful than I had hoped, but that might be because I didn't have a good dashboard in place. Here are five elements that I'd recommend for an Open Review dashboard (and all of them are possible with Google Analytics):

- Which parts of the book are being read the most?

- What are the main entry pages?

- What are the main exit pages?

- What pages have the highest completion rate (based on scroll depth)?

- What pages have the lowest completion rate (based on scroll depth)?
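To make the five dashboard elements above concrete, here is a minimal sketch of how they could be computed once page-view data has been exported. It does not use Google Analytics or its API. It assumes a hypothetical format in which each reading session is an ordered list of (page, maximum scroll fraction) pairs, and it treats a page view as "completed" if the reader scrolled at least 90% of the way down; both the format and the 90% threshold are illustrative assumptions, as are the example page paths.

```python
from collections import Counter

# Assumed (hypothetical) threshold: a page view counts as "completed"
# if the reader scrolled at least 90% of the way down the page.
COMPLETION_THRESHOLD = 0.9


def dashboard(sessions):
    """Summarize reader behavior for an Open Review-style dashboard.

    `sessions` is a list of sessions; each session is an ordered list of
    (page, max_scroll_fraction) tuples, one per page view. This input format
    is an assumption for illustration, not an export format of any tool.
    """
    views = Counter()      # how often each page was viewed
    entries = Counter()    # first page of each session
    exits = Counter()      # last page of each session
    completed = Counter()  # views that reached the scroll-depth threshold

    for session in sessions:
        if not session:
            continue
        entries[session[0][0]] += 1
        exits[session[-1][0]] += 1
        for page, depth in session:
            views[page] += 1
            if depth >= COMPLETION_THRESHOLD:
                completed[page] += 1

    # Completion rate per page = completed views / all views of that page.
    completion_rate = {page: completed[page] / views[page] for page in views}
    ranked = sorted(completion_rate, key=completion_rate.get)  # lowest first

    return {
        "most_read": views.most_common(),
        "top_entry_pages": entries.most_common(),
        "top_exit_pages": exits.most_common(),
        "completion_rate": completion_rate,
        "lowest_completion": ranked,
        "highest_completion": ranked[::-1],
    }


# Usage example with made-up sessions and hypothetical page paths.
sessions = [
    [("/preface", 1.0), ("/ch1/intro", 0.95), ("/ch1/observing", 0.4)],
    [("/ch1/intro", 0.5), ("/ch1/observing", 0.3)],
    [("/preface", 0.95)],
]
summary = dashboard(sessions)
print(summary["top_entry_pages"])
print(summary["lowest_completion"])
```

Computing completion per page view, rather than per reader, is a simple choice; a real dashboard might instead deduplicate repeat visits by the same reader before counting.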

Psychological effects

There is one last way that Open Review led to a better book: it made me more energized to make revisions. To be honest, for me, writing Bit by Bit was frustrating and exhausting. It was a huge struggle to get to the point where the manuscript was ready for peer review and Open Review. Then, after receiving the feedback from peer review, I needed to revise the manuscript. Without the Open Review process, which I found exciting and rejuvenating, I'm not sure I would have had the mental energy needed to make revisions.

In conclusion, Open Review definitely helped make Bit by Bit better, and there are many ways that Open Review could be improved. I want to say again that I'm grateful to everyone who contributed to the Open Review process: benzevenbergen, bp3, cfelton, chase171, banivos, DBLarremore, differentgranite, dmerson, dmf, efosse, fasiha, huntr, jboy, jeschonnek.1, jtorous, jugander, kerrymcc, leohavemann, LMZ, Nick_Adams, nicolemarwell, nir, person, pkrafft, rchew, sculliwag, sjk, Stephen_L_Morgan, toz, vnemana.

You can also read more about how the Open Review of Bit by Bit led to higher sales and increased access to knowledge. And you can put your own manuscript through Open Review using the Open Review Toolkit, either by downloading the open-source code or by hiring one of the preferred partners. The Open Review Toolkit is supported by a grant from the Alfred P. Sloan Foundation.

About the author

Matthew J. Salganik is professor of sociology at Princeton University, where he is also affiliated with the Center for Information Technology Policy and the Center for Statistics and Machine Learning. His research has been funded by Microsoft, Facebook, and Google, and has been featured on NPR and in such publications as the New Yorker, the New York Times, and the Wall Street Journal.

Related posts

Matthew Salganik: The Open Review of Bit by Bit, Part 2—Higher sales

Matthew Salganik: The Open Review of Bit by Bit, Part 3—Increased access to knowledge